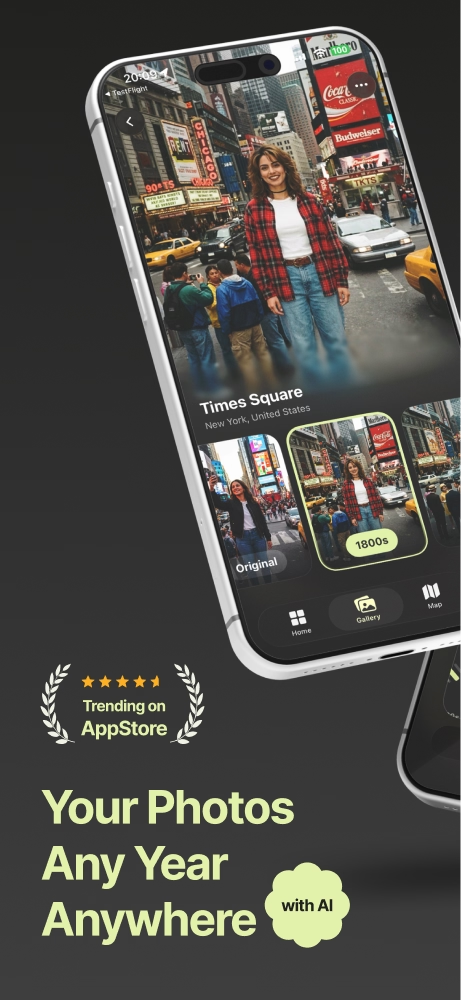

AI-Powered Historical Photo Transformation App

The Motivation

AI image generation created new creative possibilities, but most tools felt complex and disconnected from real life. With Portt Time Travel, I wanted to make history explorable through something everyone already has: their own photos.

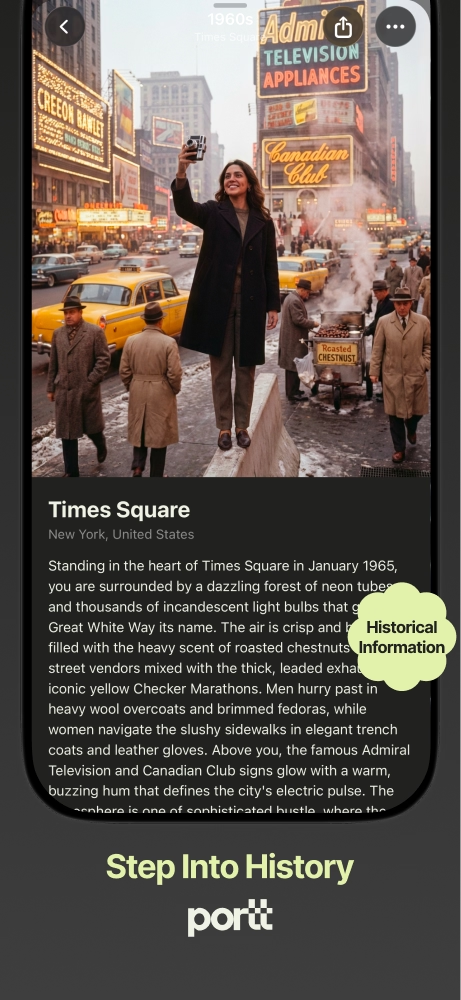

It started with a simple question: “What would this exact location look like in 1920?” Existing archives rarely show the specific spots we see every day. Portt closes this gap by combining:

- AI-powered visual transformation

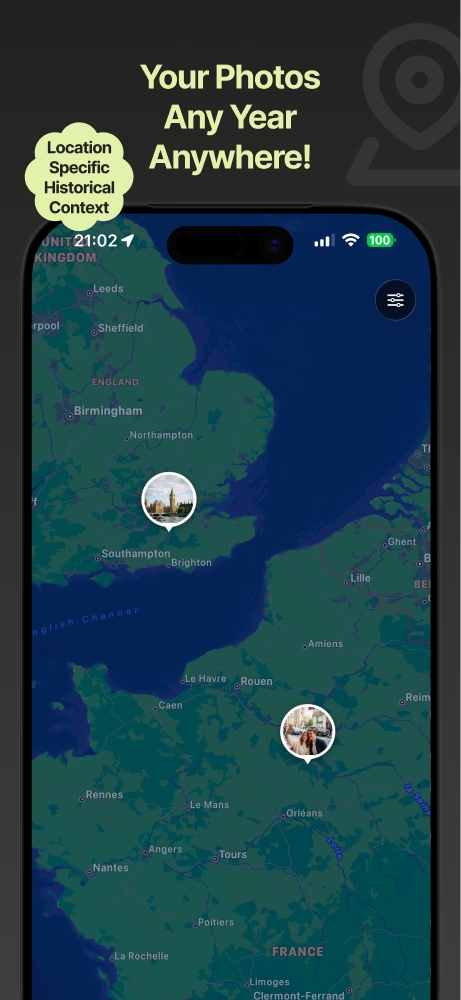

- Location-based historical context

This turns any photo into a personalized window into the past.

Beyond nostalgia, the project aims to:

- Make history tangible and relatable through personal photography

- Show real-world AI integration in a consumer app

- Lay the groundwork for a future social platform around historical exploration

Technically, the challenge is to coordinate multiple AI models (LLM for context, image generation for visuals) in a smooth mobile experience, while keeping the architecture ready to scale as a social network.
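As a rough illustration of that coordination, the two model calls can be chained so the image step always receives the LLM's historical context. This is a minimal sketch, not Portt's actual code: `HistoricalContext`, `TransformedPhoto`, `ContextStep`, `ImageStep`, and `TimeTravelPipeline` are hypothetical names, and the real network calls are assumed to sit behind the two closures.

```swift
import Foundation

// Hypothetical types for illustration; none of these names come from the Portt codebase.
struct HistoricalContext {
    let year: Int
    let summary: String
}

struct TransformedPhoto {
    let imageData: Data
    let context: HistoricalContext
}

// Step 1: an LLM call that describes a place in a given year.
typealias ContextStep = (_ placeName: String, _ year: Int) async throws -> HistoricalContext
// Step 2: an image-model call that restyles a photo using that description.
typealias ImageStep = (_ photo: Data, _ context: HistoricalContext) async throws -> Data

struct TimeTravelPipeline {
    let fetchContext: ContextStep
    let transformImage: ImageStep

    // Runs the steps in order, so the image step is grounded in the LLM output.
    func run(photo: Data, placeName: String, year: Int) async throws -> TransformedPhoto {
        let context = try await fetchContext(placeName, year)
        let image = try await transformImage(photo, context)
        return TransformedPhoto(imageData: image, context: context)
    }
}
```

Because each step is just a closure, either model can be replaced by a stub in tests or by a different vendor's client without touching `run`.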

The Process

- Product Strategy: Wrote bilingual PRDs (TR/EN) defining MVP scope, user flows, and technical specs, while planning for future social and archive features.

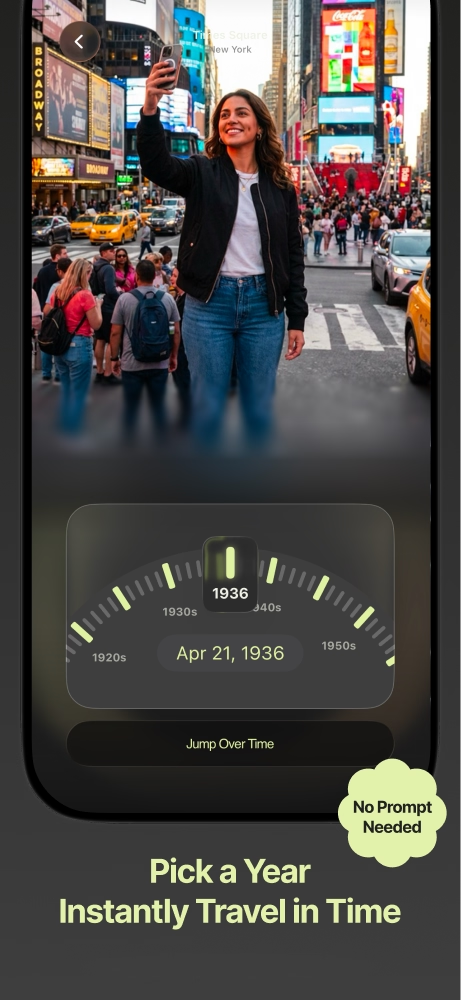

- AI Architecture: Designed a two-step pipeline:

  - Gemini for historical context

  - Nano Banana for image transformation, ensuring historically grounded results rather than generic filters

- Tech Stack: Native iOS (Swift/SwiftUI), AVFoundation camera, CoreLocation + MapKit, async/await APIs, and a data structure ready for cloud and social features.

- Key Decisions: Modular APIs, flexible prompt system, social-ready data models, and an admin dashboard for managing prompts and models.

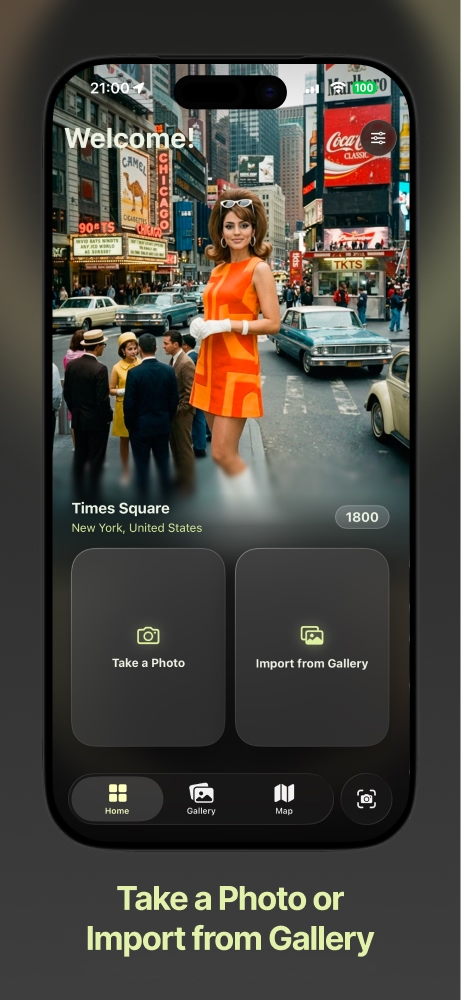

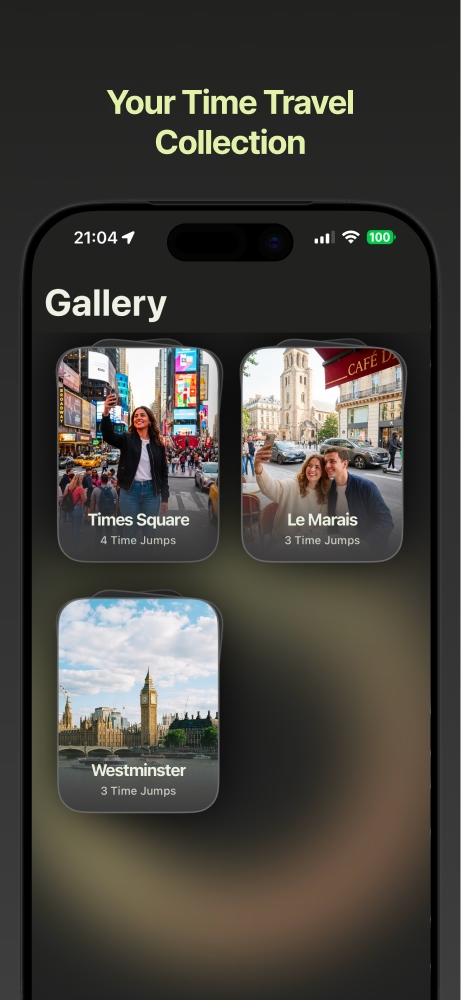

- UX & Design: A simple flow (Capture → Select Time → Transform → Share), with a time wheel spanning the 1900s–2050s and concise historical info cards.

- Tools & Ops: Figma for UI, Notion for documentation and tasks, plus a go-to-market checklist for ASO, analytics, and launch.

- Iteration & Collaboration: Built for continuous testing (prompt library, A/B tests, analytics, fallbacks) and worked closely with developers, keeping room for monetization and social pivots.
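Two pieces of the list above, the time wheel and the flexible prompt system, are simple enough to sketch. The code below assumes one wheel entry per decade and a `{placeholder}` syntax for prompts. Both are illustrative assumptions, and `Decade`, `timeWheelDecades`, and `PromptTemplate` are hypothetical names.

```swift
import Foundation

// Hypothetical model for one stop on the time wheel.
struct Decade: Equatable {
    let startYear: Int
    var label: String { "\(startYear)s" }
}

// Builds the wheel entries from the 1900s through the 2050s, one per decade.
func timeWheelDecades(from first: Int = 1900, through last: Int = 2050) -> [Decade] {
    stride(from: first, through: last, by: 10).map { Decade(startYear: $0) }
}

// Hypothetical versioned prompt template using {placeholder} markers.
struct PromptTemplate {
    let id: String
    let version: Int
    let text: String

    // Replaces each {key} with its value; placeholders without a value are left as-is.
    func render(_ values: [String: String]) -> String {
        values.reduce(text) { partial, pair in
            partial.replacingOccurrences(of: "{\(pair.key)}", with: pair.value)
        }
    }
}
```

Keeping the `version` next to the template text is one way to make A/B tests and rollbacks traceable, since every generated image can record which prompt version produced it.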

Results

MVP Deliverable

Shipped a development‑ready iOS MVP with clear acceptance criteria for:

- Camera integration

- AI processing pipeline

- Result display and sharing

All guided by a detailed PRD that doubles as a QA checklist.

Technical Innovation

Designed a scalable AI orchestration system that:

- Coordinates multiple LLM and image models for coherent historical output

- Allows fast switching between providers (Gemini, OpenAI, Claude, image APIs) without rewriting the core architecture
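One common way to get this kind of provider switching is to hide every vendor behind a shared protocol and pick the active one by identifier. The sketch below assumes that approach. `TextModelProvider`, `StubProvider`, and `ProviderRegistry` are hypothetical names, the calls are synchronous for brevity, and no real vendor SDK is used.

```swift
import Foundation

// Hypothetical abstraction: the app core depends only on this protocol.
protocol TextModelProvider {
    var id: String { get }
    func complete(prompt: String) -> String
}

// Stand-in for a real vendor client; it only echoes the prompt with its id.
struct StubProvider: TextModelProvider {
    let id: String
    func complete(prompt: String) -> String { "[\(id)] \(prompt)" }
}

enum ProviderError: Error {
    case unknownProvider(String)
}

final class ProviderRegistry {
    private var providers: [String: TextModelProvider] = [:]
    private(set) var activeID: String?

    // The first registered provider becomes the default.
    func register(_ provider: TextModelProvider) {
        providers[provider.id] = provider
        if activeID == nil { activeID = provider.id }
    }

    // Switches the active provider without changing any calling code.
    func activate(_ id: String) throws {
        guard providers[id] != nil else { throw ProviderError.unknownProvider(id) }
        activeID = id
    }

    func complete(prompt: String) throws -> String {
        guard let id = activeID, let provider = providers[id] else {
            throw ProviderError.unknownProvider(activeID ?? "none")
        }
        return provider.complete(prompt: prompt)
    }
}
```

With this shape, an admin dashboard would only need to change the active identifier; the camera, pipeline, and UI code keep calling `complete(prompt:)` unchanged.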

Foundation for Growth

Prepared social‑ready, archive‑ready data models, including:

- User authentication and profiles

- Social hooks (likes, shares, follows)

- Public/private content settings

- Space for future historical archive integrations
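To make the list above concrete, here is a minimal sketch of what such models could look like as `Codable` Swift structs. The field names and the `Visibility`, `UserProfile`, and `TimeTravelPost` types are assumptions for illustration, not the schema from the PRD.

```swift
import Foundation

// Hypothetical public/private content setting.
enum Visibility: String, Codable {
    case isPublic = "public"
    case isPrivate = "private"
}

struct UserProfile: Codable, Equatable {
    let id: UUID
    var displayName: String
    var followerCount: Int
}

struct TimeTravelPost: Codable, Equatable {
    let id: UUID
    let authorID: UUID
    var targetYear: Int
    var visibility: Visibility
    var likeCount: Int
    // Left optional so an archive integration can be attached later.
    var archiveSourceID: String?

    // Private posts are visible only to their author.
    func isVisible(to viewerID: UUID) -> Bool {
        visibility == .isPublic || authorID == viewerID
    }
}
```

Optional fields such as `archiveSourceID` let the MVP store posts today while leaving room for archive links, since older records without the field still decode.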

Documentation Excellence

Created layered documentation tailored to different needs:

- PRD: Technical specs + acceptance criteria

- Task database: 50+ structured items across build, marketing, and ops

- Go‑to‑market checklist: ASO, analytics, launch steps

- Prompt library: Versioned, optimized AI prompts

Strategic Positioning & Next Cycles

Positioned Portt for multiple directions after App Store feedback:

- Evolving into a social platform around historical sharing

- Integrating institutional archives (e.g., libraries, museums)

- Introducing premium features (pro tools, ad‑free, advanced edits)

- Exploring partnerships with tourism boards and cultural institutions

This setup balances today’s MVP constraints with a flexible roadmap for upcoming iterations once real users and App Review responses start coming in.
